To allow reuse of commonly occurring use cases, Airflow provides abstractions called operators and sensors. Basically, any task in a workflow belongs to one of two categories:

- Operator: tasks that perform an action (e.g. submitting a Spark job).
- Sensor: tasks that wait for an action to complete (e.g. waiting for a Spark job to complete).

Tasks that wait for an operation to complete require different scheduling patterns from tasks that perform an operation. These differences are handled in Airflow's operator and sensor base classes. Those base classes can be extended through inheritance to add any other functionality you need. In addition, because Airflow is open source, it has attracted a large community of contributors who have collectively created a great many operators for different use cases.
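To make the operator/sensor split concrete, here is a minimal plain-Python sketch of the pattern. It does not import Airflow: `SketchOperator`, `SketchSensor`, `SubmitJob` and `WaitForJob` are hypothetical stand-ins, and the shared `context` dictionary is a simplification. In Airflow itself the corresponding hooks are `BaseOperator.execute` and `BaseSensorOperator.poke`, and the polling is driven by Airflow rather than by a loop like the one below.

```python
import time
from abc import ABC, abstractmethod


class SketchOperator(ABC):
    """Hypothetical stand-in for an operator base class: performs one action."""

    def __init__(self, task_id):
        self.task_id = task_id

    @abstractmethod
    def execute(self, context):
        """Perform the action and return its result."""


class SketchSensor(SketchOperator):
    """Hypothetical stand-in for a sensor base class: polls until a condition holds."""

    def __init__(self, task_id, poke_interval=0.01, timeout=1.0):
        super().__init__(task_id)
        self.poke_interval = poke_interval
        self.timeout = timeout

    @abstractmethod
    def poke(self, context):
        """Return True once the awaited condition is met."""

    def execute(self, context):
        # The waiting logic lives in the base class, so subclasses only write poke().
        deadline = time.monotonic() + self.timeout
        while not self.poke(context):
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{self.task_id} timed out")
            time.sleep(self.poke_interval)
        return True


class SubmitJob(SketchOperator):
    """Operator: performs an action (pretends to submit a job)."""

    def execute(self, context):
        # Assumption for the demo: the fake job finishes after two polls.
        context["job_state"] = {"polls_left": 2}
        return "submitted"


class WaitForJob(SketchSensor):
    """Sensor: waits for the submitted job to complete."""

    def poke(self, context):
        state = context["job_state"]
        if state["polls_left"] == 0:
            return True
        state["polls_left"] -= 1
        return False


context = {}
submit_result = SubmitJob("submit_spark_job").execute(context)
wait_result = WaitForJob("wait_for_spark_job").execute(context)
```

Because the polling loop sits in the sensor base class, a new sensor only has to implement `poke`. This is the kind of reuse through inheritance described above.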

For instance, a workflow can consist of sequential jobs such as triggering a Spark job to perform transformations. Note that these jobs are different from vanilla cron jobs because each one depends on the preceding job having executed successfully. Hence, Airflow models these dependencies as a Directed Acyclic Graph (DAG), such as the one shown below: a typical DAG in Airflow with inter-dependent tasks. Notice the arrows between the tasks, which signify the dependencies between them.

At Locale.ai, we use Airflow extensively to orchestrate our data pipelines and user-facing workflows (shameless plug: check out our new feature, workflows). One of the key reasons Airflow is so powerful is its abstraction of a task, together with the ability to stitch tasks together to form dependencies and run them across a cluster of machines. A task in Airflow is a basic unit of execution and forms the nodes of the DAG.

If you are curious about how Airflow executes these tasks, do check out the architecture of Apache Airflow. TL;DR: Airflow has components that can use a message broker to schedule a task by pushing it into a queue, from which task workers pick it up. Depending on the executor (another neat abstraction in Airflow) being used, these workers can be scaled across machines to support a higher number of concurrent tasks.

The data ingestion workflow described in this post can be modelled as a set of Airflow tasks, each with a clear separation of concerns. As long as we maintain that separation of concerns, these tasks can be reused across other use cases.

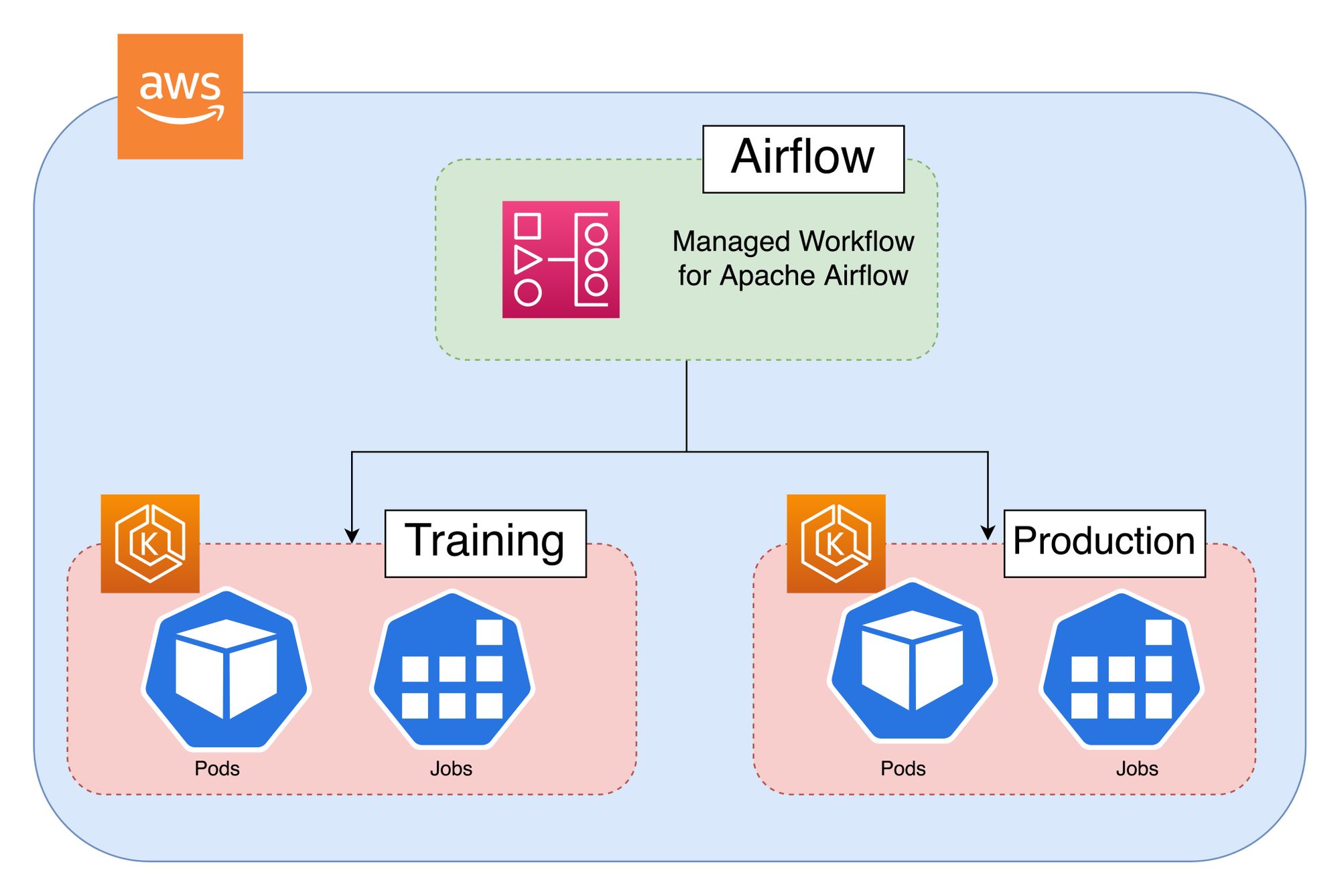
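The idea of running jobs in topological order, with ready tasks pushed onto a queue for workers, can be sketched with Python's standard library alone. This is an illustration of the concept, not how Airflow's scheduler is implemented. The task names and the `run_workflow` helper are hypothetical, and a single in-process `Queue` stands in for the message broker and workers.

```python
from graphlib import CycleError, TopologicalSorter
from queue import Queue

# Each task maps to the set of tasks it depends on (hypothetical names).
dependencies = {
    "ingest_raw_data": set(),
    "run_spark_transformations": {"ingest_raw_data"},
    "wait_for_spark_job": {"run_spark_transformations"},
    "publish_results": {"wait_for_spark_job"},
}


def run_workflow(deps):
    """Run tasks in dependency order; return the order in which they completed."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph is not acyclic

    task_queue = Queue()  # stand-in for the broker-backed task queue
    completed = []
    while sorter.is_active():
        # "Scheduler": enqueue every task whose dependencies are all done.
        for task in sorter.get_ready():
            task_queue.put(task)
        # "Worker": pick tasks off the queue and mark them as finished.
        while not task_queue.empty():
            task = task_queue.get()
            completed.append(task)  # a real worker would execute the task here
            sorter.done(task)
    return completed


execution_order = run_workflow(dependencies)

# A cyclic graph is rejected, which is why the "acyclic" part of DAG matters.
try:
    run_workflow({"a": {"b"}, "b": {"a"}})
    cycle_detected = False
except CycleError:
    cycle_detected = True
```

A task is only enqueued once everything it depends on has been marked done, which is the property that distinguishes this from independent cron jobs.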

Apache Airflow Kubernetes operator series

Airflow is an open-source tool that lets you orchestrate workflows. A workflow in this context is a series of jobs that need to be executed in a certain topological order. A typical example is a series of interdependent jobs that ingest data into a datastore. These jobs can also be scheduled to run periodically.
